Object Tracking via Dual Linear Structured SVM and Explicit Feature Map

Jifeng Ning, Jimei Yang, Shaojie Jiang, Lei Zhang and Ming-Hsuan Yang

Abstract: Structured support vector machine (SSVM) based methods have demonstrated encouraging performance in recent object tracking benchmarks. However, the complex and expensive optimization limits their deployment in real-world applications. In this paper, we present a simple yet efficient dual linear SSVM (DLSSVM) algorithm to enable fast learning and execution during tracking. By analyzing the dual variables, we propose a primal classifier update formula where the learning step size is computed in closed form. This online learning method significantly improves the robustness of the proposed linear SSVM with lower computational cost. Second, we approximate the intersection kernel for feature representations with an explicit feature map to further improve tracking performance. Finally, we extend the proposed DLSSVM tracker with multi-scale estimation to address the "drift" problem. Experimental results on large benchmark datasets with 50 and 100 video sequences show that the proposed DLSSVM tracking algorithm achieves state-of-the-art performance.
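The closed-form step size mentioned in the abstract can be illustrated with a generic dual coordinate ascent step for a linear structured SVM. The sketch below is not the authors' MATLAB implementation. It assumes the standard dual in which the classifier is w = Σ α Ψ, every dual variable α is non-negative, and the α values belonging to one training frame sum to at most C. The names `dual_step`, `psi`, `loss`, `alpha_sum` and `c` are hypothetical.

```python
from typing import List, Tuple


def dot(a: List[float], b: List[float]) -> float:
    """Plain inner product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def dual_step(
    w: List[float],
    psi: List[float],
    loss: float,
    alpha: float,
    alpha_sum: float,
    c: float,
) -> Tuple[List[float], float, float]:
    """One dual coordinate ascent step on a single dual variable.

    psi       : feature difference Phi(x, y_true) - Phi(x, y) (assumed form)
    loss      : structured loss L(y_true, y)
    alpha     : current value of the dual variable being updated
    alpha_sum : current sum of all dual variables of the same frame
    c         : upper bound on that sum (assumed regularization constant)
    """
    sq_norm = dot(psi, psi)
    if sq_norm == 0.0:
        # A zero feature difference cannot change w; leave everything as is.
        return list(w), alpha, 0.0
    # Unconstrained maximizer of the dual objective along this coordinate.
    gamma = (loss - dot(w, psi)) / sq_norm
    # Clip so that alpha stays non-negative and the frame sum stays <= c.
    gamma = max(-alpha, min(c - alpha_sum, gamma))
    new_w = [wi + gamma * pi for wi, pi in zip(w, psi)]
    return new_w, alpha + gamma, gamma
```

Because the step is a ratio of two inner products followed by a clip, no line search or inner solver is needed, which is the property the abstract attributes to the closed-form update.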

1. Publication

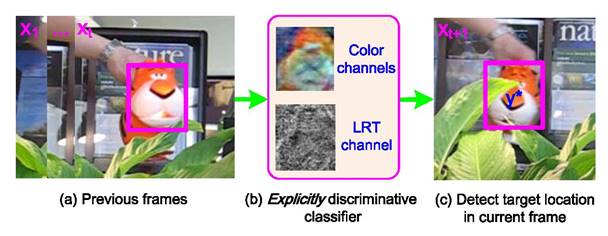

Figure 1. The proposed DLSSVM tracker.

Jifeng Ning, Jimei Yang, Shaojie Jiang, Lei Zhang and Ming-Hsuan Yang. Object Tracking via Dual Linear Structured SVM and Explicit Feature Map, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4266-4274, Las Vegas, USA, 2016. [pdf (605KB)], [MATLAB source code (544KB)]

2. Experimental results

2.1 Analysis of Proposed DLSSVM and Related SSVM Trackers

Table 1. Characteristics of SSVM trackers. NU means no unary representation for the features.

| SSVM trackers | Closed-form solution | Kernel type | Feature type | Feature dimensions | High-dimensional feature | Non-linear decision | Discriminative classifier |
|---|---|---|---|---|---|---|---|
| SSG | no | linear | image feature | 1600 | yes | no | explicit |
| Struck | yes | Gaussian | Haar-like | 192 | no | yes | implicit |
| Linear-Struck-NU | yes | linear | image feature | 1600 | yes | no | explicit |
| Linear-Struck | yes | linear | image feature | 6400 | yes | yes | explicit |
| DLSSVM-NU | yes | linear | image feature | 1600 | yes | no | explicit |
| DLSSVM | yes | linear | image feature | 6400 | yes | yes | explicit |
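Table 1 lists 1600 feature dimensions for the NU variants and 6400 for the variants that use the unary representation. One way to read that ratio is a unary code with four quantization levels per feature; this is an inference from the table, not something the page states. The sketch below shows why such a code can serve as an explicit feature map for the intersection kernel: for values quantized to integer levels, the dot product of two unary codes equals the sum of the element-wise minima. The names `unary_encode` and `levels` are hypothetical.

```python
from typing import List


def unary_encode(x: List[float], levels: int = 4) -> List[float]:
    """Expand each feature in [0, 1] into a unary code of `levels` entries.

    A value quantized to level q becomes q ones followed by (levels - q)
    zeros, so the output is `levels` times longer than the input.
    """
    code: List[float] = []
    for v in x:
        clipped = min(max(v, 0.0), 1.0)
        q = int(round(clipped * levels))  # integer level in 0..levels
        code.extend([1.0] * q + [0.0] * (levels - q))
    return code


def intersection_kernel(x: List[float], y: List[float]) -> float:
    """Histogram intersection kernel: sum of element-wise minima."""
    return sum(min(a, b) for a, b in zip(x, y))


def linear_kernel(x: List[float], y: List[float]) -> float:
    """Ordinary dot product, used on the encoded vectors."""
    return sum(a * b for a, b in zip(x, y))
```

For inputs that already lie on the quantization grid, `linear_kernel(unary_encode(x), unary_encode(y)) / levels` matches `intersection_kernel(x, y)`; for other inputs it is an approximation whose error shrinks as `levels` grows. This lets a linear classifier on the encoded features behave like a non-linear one on the original features, consistent with the "Non-linear decision" column.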

Table 2. Experimental comparisons of the proposed DLSSVM and related trackers with different parameter settings: B50, B100 and B500 mean the budgets of support vectors are 50, 100 and 500 respectively. Bold entries indicate the best result in each column and italic entries the second best.

| SSVM trackers | OPE precision (20 pixels) | OPE success (AUC) | TRE precision (20 pixels) | TRE success (AUC) | SRE precision (20 pixels) | SRE success (AUC) | Mean FPS |
|---|---|---|---|---|---|---|---|
| DLSSVM-NU | 0.794 | 0.557 | 0.810 | 0.581 | 0.724 | 0.508 | *28.88* |
| DLSSVM-B50 | 0.828 | 0.587 | 0.846 | 0.606 | 0.780 | 0.543 | 10.10 |
| DLSSVM-B100 | *0.829* | *0.589* | *0.856* | *0.610* | 0.783 | 0.545 | 10.22 |
| DLSSVM-B500 | 0.826 | 0.588 | 0.852 | 0.609 | *0.787* | *0.548* | 10.37 |
| Scale-DLSSVM | **0.861** | **0.608** | **0.857** | **0.615** | **0.811** | **0.565** | 5.40 |
| SSG | 0.608 | 0.443 | 0.665 | 0.486 | 0.584 | 0.424 | **46.13** |
| Struck | 0.656 | 0.474 | 0.707 | 0.514 | 0.634 | 0.449 | 0.90 |
| Linear-Struck-NU | 0.703 | 0.506 | 0.751 | 0.540 | 0.655 | 0.462 | 1.46 |
| Linear-Struck | 0.792 | 0.556 | 0.824 | 0.589 | 0.736 | 0.515 | 1.20 |
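The two accuracy measures in Table 2 follow the benchmark protocol of [1]: precision is the fraction of frames whose predicted center lies within 20 pixels of the ground truth, and success is summarized by the area under the curve (AUC) of the overlap-threshold plot. A minimal sketch of both is given below. It assumes boxes in (x, y, width, height) form and 21 evenly spaced overlap thresholds in [0, 1]; the helper names are hypothetical and this is not the benchmark toolkit's code.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between the centers of two boxes, in pixels."""
    ax, ay = a[0] + a[2] / 2.0, a[1] + a[3] / 2.0
    bx, by = b[0] + b[2] / 2.0, b[1] + b[3] / 2.0
    return ((ax - bx) ** 2 + (ay - by) ** 2) ** 0.5


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0])
    ih = min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1])
    inter = max(iw, 0.0) * max(ih, 0.0)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_at(pred: Sequence[Box], truth: Sequence[Box],
                 threshold: float = 20.0) -> float:
    """Fraction of frames with center error within `threshold` pixels."""
    hits = sum(center_error(p, t) <= threshold for p, t in zip(pred, truth))
    return hits / len(truth)


def success_auc(pred: Sequence[Box], truth: Sequence[Box],
                steps: int = 21) -> float:
    """Mean success rate over evenly spaced overlap thresholds in [0, 1]."""
    overlaps = [iou(p, t) for p, t in zip(pred, truth)]
    rates = []
    for k in range(steps):
        thr = k / (steps - 1)
        rates.append(sum(o > thr for o in overlaps) / len(overlaps))
    return sum(rates) / steps
```

Calling `precision_at(pred, truth)` and `success_auc(pred, truth)` on the per-frame boxes of one sequence gives the two per-sequence scores; the benchmark then averages them over sequences and, for TRE and SRE, over the perturbed runs.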

2.2 Comparisons with State-of-the-Art Trackers

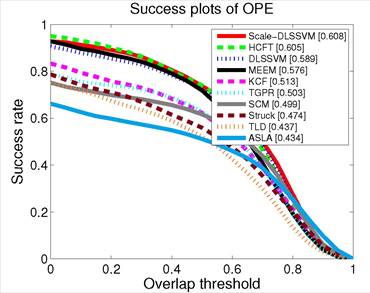

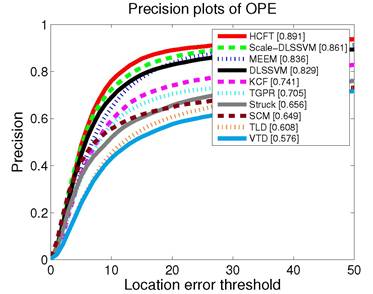

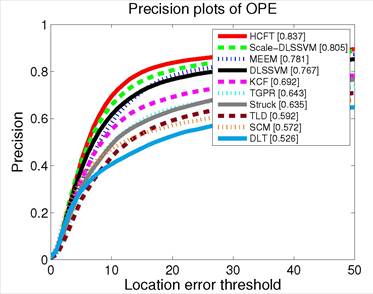

Figure 2. Average precision plot and success

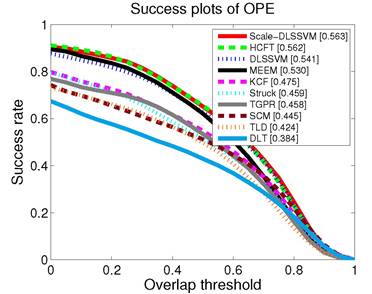

plot for the OPE on the TB50 [1] dataset.

Figure 3. Average precision plot and success

plot (bottom row) for the OPE on the TB100 [2] dataset.

2.3 Download the experimental data

DLSSVM and Scale-DLSSVM on OTB-50 and OTB-100 (28MB)

3. MATLAB source code

DLSSVM and Scale-DLSSVM (544KB)

References

[1] Y. Wu, J. Lim, and M.-H. Yang. Online object tracking: A benchmark. In CVPR, 2013.

[2] Y. Wu, J. Lim, and M.-H. Yang. Object tracking benchmark. PAMI, 37(9):1834-1848, 2015.